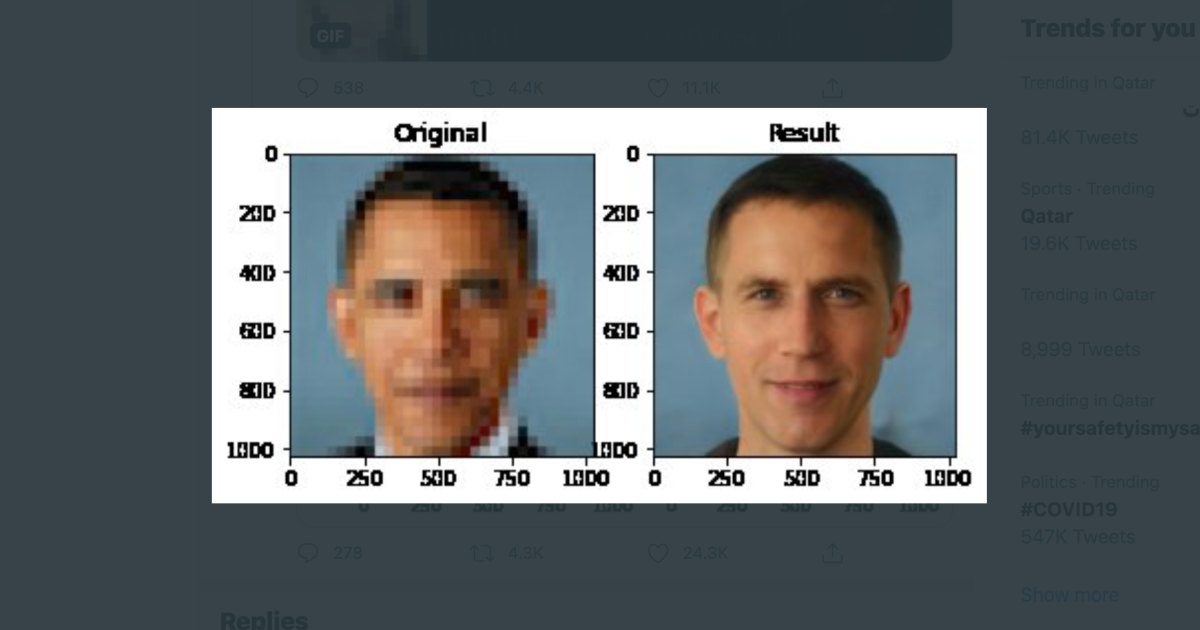

A low-resolution image of Barack Obama, the first Black president of the United States, was fed into an algorithm designed to generate depixelated faces, and the output was a white man. The widely shared image illustrates the biases embedded in AI research.

The bias is not limited to Obama. When the same algorithm is used to generate high-resolution images of actress Lucy Liu or Congresswoman Alexandria Ocasio-Cortez from low-resolution inputs, the resulting faces look distinctly white.

But what causes these outputs? And how does the AI bias occur?

First, we need to know a little about the technology involved. The software that generates these images is an algorithm called "PULSE", which uses a technique known as upscaling to process visual data.

Upscaling is like the “zoom and enhance” trope seen on TV and in movies, but unlike in Hollywood, real software cannot create new data from nothing. Instead, these programs use machine learning to fill in the blanks when converting a low-resolution image into a high-resolution one.

To do this, PULSE relies on another algorithm, StyleGAN, which was created by researchers at NVIDIA. StyleGAN is the algorithm responsible for generating realistic faces of people who do not exist, which you can see on sites like ThisPersonDoesNotExist.com. The faces are so realistic that they are often used to create fake social media profiles.

What PULSE does is use StyleGAN to “imagine” the high-resolution version of a pixelated, poor-quality input. It does this not by “enhancing” the original low-resolution image but by generating a completely new high-resolution face that, when pixelated, looks the same as the one the user entered.
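The search described above can be illustrated with a toy sketch. This is not the PULSE code: `toy_generator` is a hypothetical stand-in for StyleGAN that turns four latent numbers into a 4x4 grayscale image, and a plain random hill-climb replaces whatever optimizer the real system uses. The sketch only shows the idea of looking for a generated image that, once downscaled, matches the low-resolution input.

```python
import random

def downscale(img, factor=2):
    """Average-pool a square grayscale image (a list of rows) by `factor`."""
    n = len(img) // factor
    return [
        [
            sum(img[r * factor + dr][c * factor + dc]
                for dr in range(factor) for dc in range(factor)) / factor ** 2
            for c in range(n)
        ]
        for r in range(n)
    ]

def distance(a, b):
    """Sum of squared pixel differences between two same-sized images."""
    return sum((x - y) ** 2
               for row_a, row_b in zip(a, b)
               for x, y in zip(row_a, row_b))

def toy_generator(z):
    """Hypothetical stand-in for StyleGAN: 4 latent numbers -> 4x4 image.

    Each latent value sets the brightness of one 2x2 block, and a fixed
    checker texture adds fine detail that averages out when downscaled.
    """
    texture = [[0.1, -0.1], [-0.1, 0.1]]
    return [
        [z[(r // 2) * 2 + c // 2] + texture[r % 2][c % 2] for c in range(4)]
        for r in range(4)
    ]

def search_latent(low_res, steps=5000, seed=0):
    """Random hill-climb over latents; keep a candidate only if it matches better."""
    rng = random.Random(seed)
    z = [rng.random() for _ in range(4)]
    best = distance(downscale(toy_generator(z)), low_res)
    for _ in range(steps):
        candidate = [v + rng.gauss(0, 0.05) for v in z]
        err = distance(downscale(toy_generator(candidate)), low_res)
        if err < best:
            z, best = candidate, err
    return z, best

low_res = [[0.2, 0.8], [0.5, 0.3]]   # the pixelated input (made-up values)
z, err = search_latent(low_res)
high_res = toy_generator(z)          # a brand-new image, not an enhanced input
print(f"mismatch after downscaling: {err:.6f}")
```

Note that the fine detail in `high_res` comes entirely from the generator, since the low-resolution input cannot encode it. In a real system that detail reflects whatever the generator learned from its training data.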

An image of @BarackObama getting upsampled into a white guy is floating around because it illustrates racial bias in #MachineLearning. Just in case you think it isn't real, it is, I got the code working locally. Here is me, and here is @AOC. pic.twitter.com/kvL3pwwWe1

- Robert Osazuwa Ness (@osazuwa) June 20, 2020

PULSE's creators say that when the algorithm is used to upscale pixelated images, it more often generates faces with Caucasian features.

The creators of the algorithm wrote on GitHub that PULSE does appear to produce white faces much more frequently than faces of people of color, and that this bias is likely inherited from the dataset StyleGAN was trained on, although there may be other factors they are not aware of.

In other words, because of the data StyleGAN was trained on, when it tries to produce a face that matches the pixelated input image, it defaults to white features.
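A deliberately simplified sketch of this effect follows. It assumes a pixelated input that is equally consistent with faces from two groups, labelled "A" and "B" purely for illustration, so that only the generator's learned prior decides the result. The function `sample_outputs` and the parameter `train_share_a` are hypothetical names, and the model is a toy, not a description of StyleGAN's internals.

```python
import random
from collections import Counter

def sample_outputs(train_share_a, n=10_000, seed=0):
    """Count which group the toy generator lands on over n ambiguous inputs.

    Assumption: the input fits groups "A" and "B" equally well, so each
    output simply follows the share of "A" faces in the training data.
    """
    rng = random.Random(seed)
    draws = ("A" if rng.random() < train_share_a else "B" for _ in range(n))
    return Counter(draws)

skewed = sample_outputs(train_share_a=0.9)    # training data dominated by group A
balanced = sample_outputs(train_share_a=0.5)  # evenly split training data
print("skewed training data:  ", dict(skewed))
print("balanced training data:", dict(balanced))
```

Under these assumptions, the skewed generator returns group "A" for most ambiguous inputs even though nothing in the input favors it, while the balanced one splits roughly evenly.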

This problem is very common in machine learning, and it is one of the reasons facial recognition algorithms perform worse on non-white and female faces. The data used to train artificial intelligence is often skewed toward a single demographic, white men, and when a program sees data outside that demographic, it performs poorly. Not coincidentally, it is white men who dominate AI research.

The researchers did not notice the bias in the algorithm's output before the tool became widely available, which suggests that this type of technology should be tested thoroughly before it is deployed.