Whistleblower Thomas Le Bonniec and Télérama journalist Olivier Tesquet explain on Monday on "Culture Médias" how Apple and Google handle the data collected by their voice assistants.

This secretive work calls into question how far these big tech companies respect our privacy.

INTERVIEW

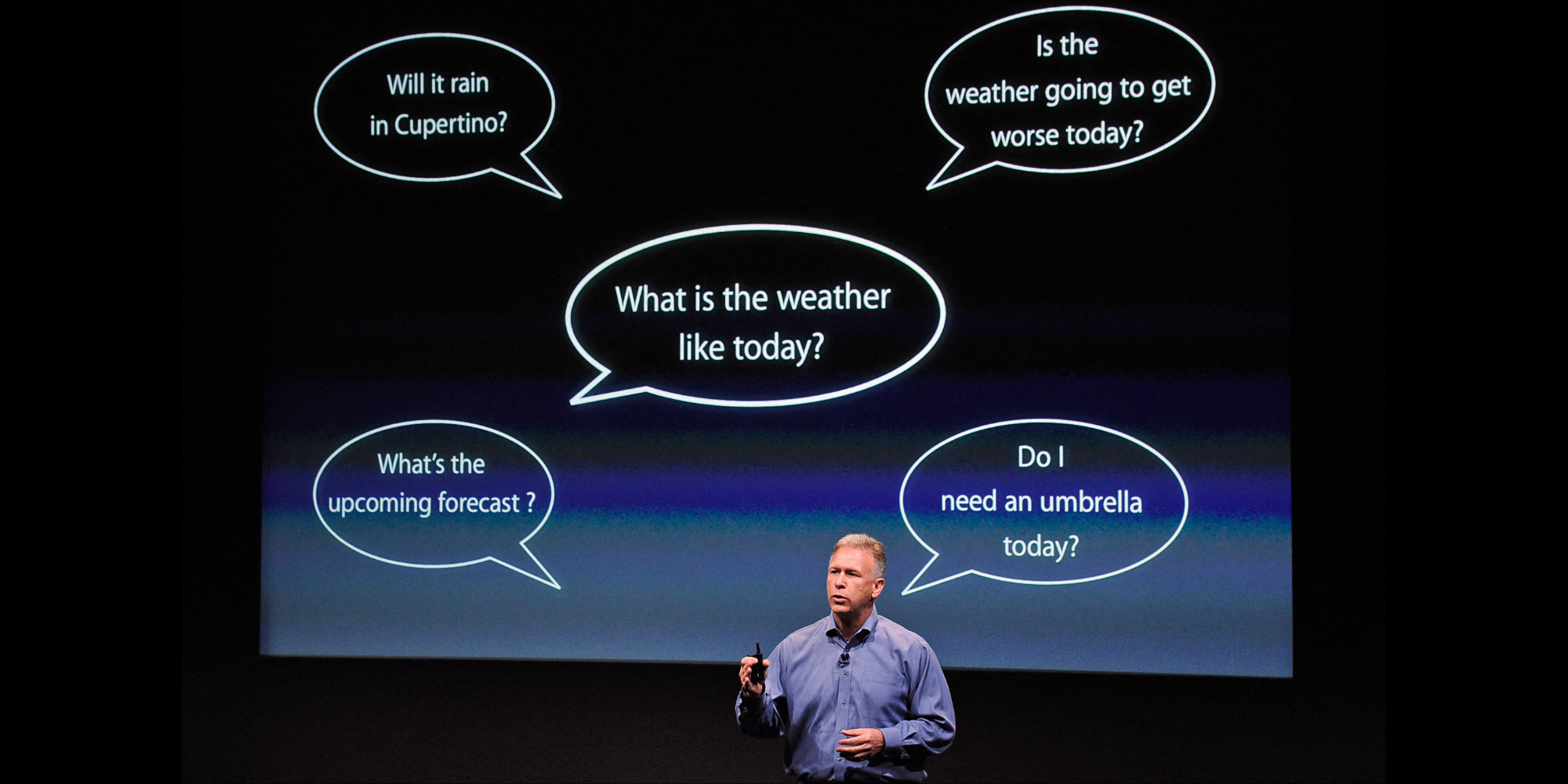

What happens when we put a question to Siri or "Ok Google", the voice assistants on our cell phones?

The least that can be said is that the big companies in the sector, such as Apple and Google, remain rather secretive on the subject.

Thomas Le Bonniec worked on the processing of the data thus collected.

This whistleblower helped Olivier Tesquet, a journalist at Télérama, with his investigation into the subject.

On Monday, on Culture Médias, they warn of the risks that voice assistants pose to our privacy.

"You have to feed the machine, and to feed it you need human cogs, of which I was a part."

This is how Thomas Le Bonniec sums up the 10 weeks he spent, between April and May 2019, at an Apple subcontractor's data center in Ireland.

His job was to listen to and process the recordings from requests made via the voice assistant Siri.

1,300 recordings per day per employee

It was a job that Apple wanted to keep secret.

"The confidentiality clause was very clear: we could not talk about our work with colleagues, our family, or our friends. We had to remain silent," explains Thomas Le Bonniec.

The Frenchman had to process a minimum of 1,300 personal recordings per day, each of which lasted from one second to two minutes.

The process was meant to verify and improve Siri's understanding of the recordings, but also to annotate spoken keywords likely to interest Apple: contact names and numbers, place names, artists, and so on.

"All big companies act the same"

Thomas Le Bonniec thus had access to the private lives of Siri users.

"There was sexuality, pornography, very intimate things like when people talk about their cancer, multiple sclerosis, chemotherapy, or things about their family relationships," he recalls.

This content is all the more intimate because voice assistants are regularly triggered by mistake.

For journalist Olivier Tesquet, who investigated the subject, this way of processing private data is widespread.

"All the big companies that have voice assistants act the same way, because to improve their responses you have to train them. The only way to do that is to have human ears," he says.

"It is through the invisible work of humans that the machine is improved," adds Thomas Le Bonniec.

According to Le Bonniec, little has changed since 2019, when he left Apple's subcontractor because of burnout.

"There have been some changes, cosmetic and marginal," he says.

"Apple has decided to do without subcontractors. They will apparently hide the names and phone numbers in the recordings when they are processed. Above all, users must confirm that they are willing to take part in improving the service."

According to him, these changes do not solve the privacy problem.

He points out that hundreds of millions of recordings were processed around the world before these new rules were introduced.